Several tools have been, or are being, developed to automate the assessment of compliance with the FAIR data principles (Wilkinson et al., 2016). Because the FAIR principles are advisory rather than prescriptive, those interested in automating such assessments have proposed, for each principle, one or more abstract criteria for evaluating whether that principle has been met. These criteria are referred to as metrics; examples include the metrics for data started by the FAIRsFAIR initiative and the metrics for software assessment developed by the FAIR-IMPACT project.

To perform an automated assessment of a principle, each metric has to be turned into one or more specific tests, each of which is a practical way of checking whether the metric is satisfied. Finally, the tests can be scripted so that the corresponding tool can use them to evaluate the level of FAIR compliance.
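The chain from metric to tests to script can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the metric identifier `EXAMPLE-LICENCE-1`, the two tests, the `KNOWN_LICENSES` set and the flat metadata dictionary are hypothetical and do not come from FAIRsFAIR, FAIR-IMPACT or any of the tools discussed here.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical metadata record: a flat mapping of field names to values.
Metadata = dict[str, str]

# Hypothetical short list of accepted licence identifiers (illustration only).
KNOWN_LICENSES = {"MIT", "Apache-2.0", "GPL-3.0-only", "BSD-3-Clause"}


@dataclass
class Test:
    """One concrete, scriptable check that contributes to a metric."""
    name: str
    check: Callable[[Metadata], bool]


@dataclass
class Metric:
    """An abstract criterion, made practical by one or more tests."""
    identifier: str
    description: str
    tests: list[Test]

    def evaluate(self, metadata: Metadata) -> dict[str, bool]:
        # Run every test against the metadata and record pass/fail by name.
        return {test.name: test.check(metadata) for test in self.tests}


def score(results: dict[str, bool]) -> float:
    """Fraction of tests passed, as one simple way to express compliance."""
    return sum(results.values()) / len(results)


# Hypothetical metric: "the software declares a licence".
license_metric = Metric(
    identifier="EXAMPLE-LICENCE-1",  # made-up identifier
    description="The software declares a licence.",
    tests=[
        Test("license field present",
             lambda m: bool(m.get("license", "").strip())),
        Test("license is a known identifier",
             lambda m: m.get("license") in KNOWN_LICENSES),
    ],
)
```

Calling `license_metric.evaluate({"license": "MIT"})` runs both tests against the record, and `score` reduces the results to a single number. Real tools differ in how they gather metadata and how they weight tests, so this shows only the general shape of the approach.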

FAIR principles are also being developed for other specific types of digital objects, such as computational workflows and ontologies. In particular, groups involved in developing automated assessment tools for the FAIR4RS principles (Chue Hong et al., 2022) are establishing metrics to assess the FAIRness of research software and are incorporating these into the same tooling that has been developed to assess FAIR data.

FAIR-IMPACT partners examined whether three existing automated assessment tools, built to assess the FAIR data principles (Wilkinson et al., 2016), could be applied or repurposed to assess compliance with the FAIR for Research Software (FAIR4RS) principles (Chue Hong et al., 2022).

The tools examined

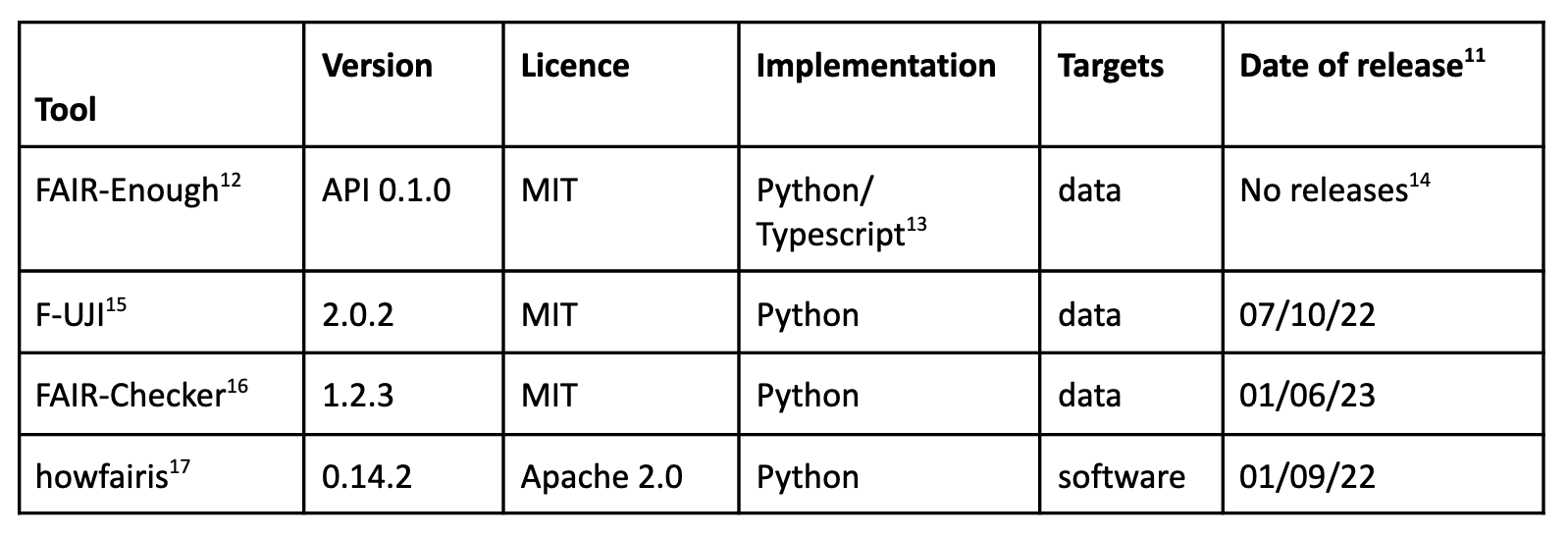

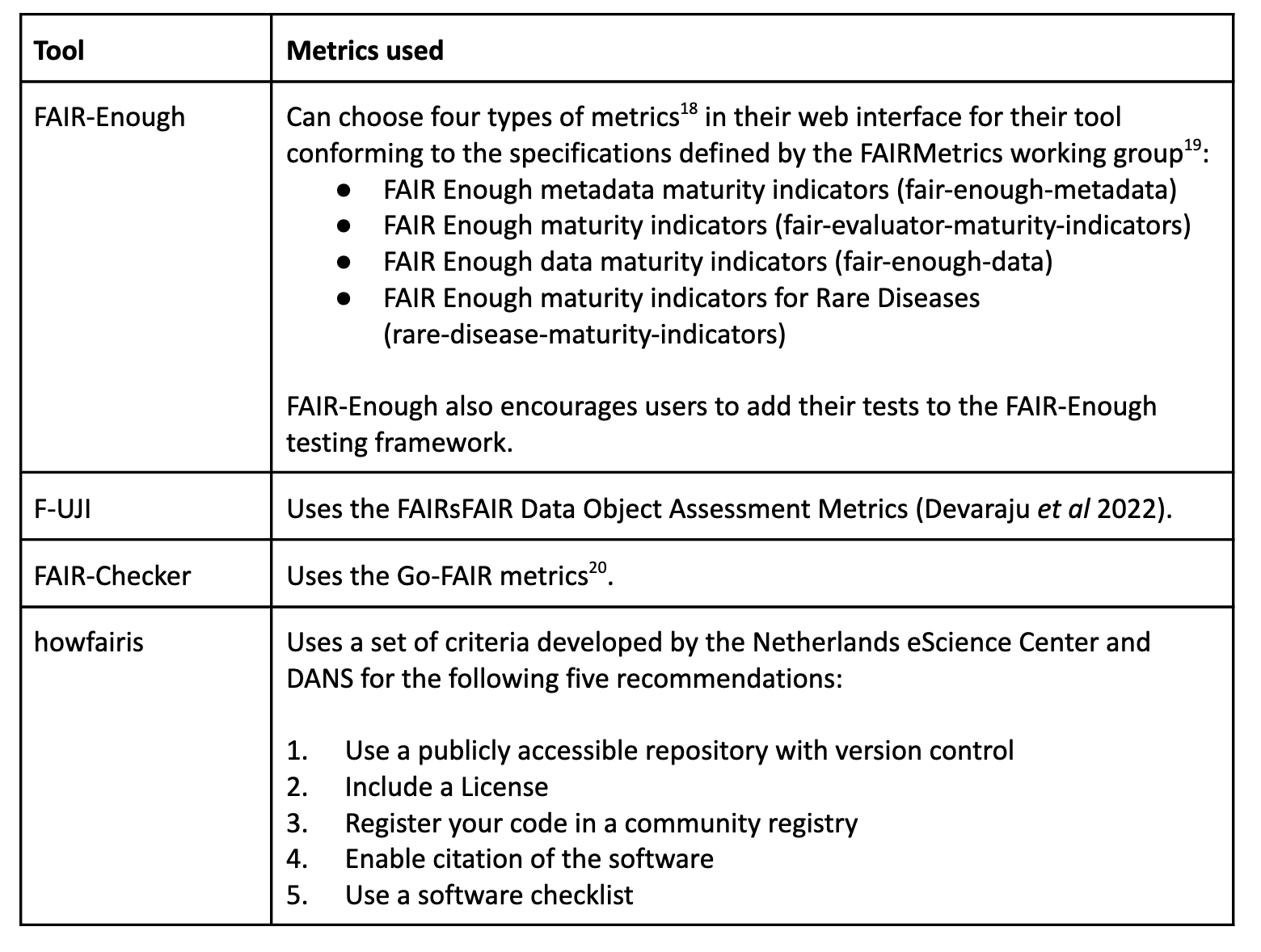

Four FAIR assessment tools have been examined. Three of these tools were originally developed to test compliance with the FAIR data principles: FAIR-Enough (Emonet and Dumontier), F-UJI (Devaraju and Huber, 2021) and FAIR-Checker (Gaignard et al., 2023). The fourth tool, howfairis (Spaaks et al., 2022), targets software but does not explicitly use the FAIR4RS principles in its assessment; instead, it relies on five recommendations for FAIR software developed by the Netherlands eScience Center and the Data Archiving and Networked Services (DANS), a Dutch centre that advises on the sustainable storage and sharing of data, and the coordinator of the FAIR-IMPACT project. The source code for all of these tools is available on GitHub.

The evaluations were carried out between April and July 2023. Each tool used different metrics to make its FAIRness assessment.

Read the report

The report, which is an output of the FAIR-IMPACT work on "Metrics, certification and guidelines", is now available for download from the FAIR-IMPACT community on Zenodo:

Antonioletti, M., Wood, C., Chue Hong, N., Breitmoser, E., Moraw, K., & Verburg, M. (2024). Comparison of tools for automated FAIR software assessment (1.0). Zenodo. https://doi.org/10.5281/zenodo.13268685